In this post, I respond to July 2023’s T-SQL Tuesday #164 Invitation and talk about emotive code on the Amiga.

Introduction

This month’s T-SQL Tuesday comes from Erik Darling. To paraphrase his invitation:

Think back to the last time you saw code that made you feel a thing. Hopefully a positive thing. Think along the lines of: surprise, awe, inspiration, excitement. Or maybe it was just code that sort of sunk its teeth into you and made you want to learn a whole lot more.

Now, while Erik’s invitation mentions the last time, I want to talk about the first time. This is an ideal opportunity to expand on something I wrote on my About page about AMOS tutorials. But before I start explaining why I consider some mid-nineties Amiga code to be emotive, I should explain a few things.

Key Topics

This section introduces some of the key topics I talk about in this post.

Amiga

The Amiga home computer was first released by Commodore in 1985, and was one of the first computers with custom graphics and sound hardware.

The Amiga was designed to compete with the Apple Macintosh and the IBM PC. It had a custom chipset and a multitasking operating system. These made the Amiga appeal to a wide range of audiences including:

- Businesses wanting to run multiple applications simultaneously.

- Artists such as Andy Warhol.

- Musicians like Aphrodite, Equinox, DJ Zinc and Calvin Harris.

The Amiga also had expansion capabilities, with various third-party add-ons available like the Video Toaster production tool. This allowed the Amiga to be used for visual effects in TV shows like Babylon 5 and SeaQuest DSV, and films like Apollo 13 and Titanic.

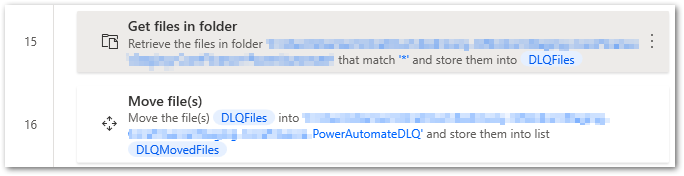

The Amiga Wikipedia page has information on history, chipsets, trivia and more.

Amiga Format

Amiga Format was a monthly UK computer magazine published from 1989 to 2000.

Broadly, there were three types of Amiga magazines:

- Game-focused magazines like Amiga Power (Yayyy – Ed).

- Serious magazines like Amiga Shopper.

- Magazines with some of both, like Amiga Format.

Amiga Format's content was split roughly 50/50 between games and serious coverage. This extended to its coverdisks, with a fairly even split of utilities and game demos across each month's disks.

My first Amiga Format was issue 30, purchased alongside the family Amiga 500+ Cartoon Classics bundle.

And I still have them both! Young me chucked the magazine around a fair bit, so years later I bought a copy from eBay in better condition. I’ll get some photos on Instagram when I get a chance.

The Amiga Format Wikipedia page has some additional information including some regular features.

AMOS

AMOS (or, to give its full name, AMOS BASIC) is a game-oriented Amiga dialect of the BASIC programming language.

First published in 1990 by Europress Software, AMOS was well received and sold over 40,000 copies worldwide.

Users work in the AMOS Editor: a text editor with the AMOS language built in. This allows users to type, edit, save and run AMOS programs from within the editor, without the need for a separate runtime tool or compiler.

The AMOS Wikipedia page goes into more technical detail, and this PowerPrograms page offers a user's perspective on AMOS.

In addition, AMOS Professional has a GitHub repo, and I would be remiss if I didn't mention Dan Wood's excellent AMOS retrospective video.

From AMOS To YAML

In this section, I talk about the Amiga code that ended up being so emotive and indirectly set my future career in motion.

Scene Setting

It’s Christmas 1992, and I’m a young shark pup still in single digits. I hit the newsagent’s magazine shelves with pocket money in hand and buy that month’s Amiga Format.

Yes – it does say January 1993 on the cover. Before writing this post I never had a good explanation for this, so I reached out to the Amiga Addict Discord for some clarity.

It turns out that, during this period, Amiga Format produced issues every four weeks instead of monthly. Former Amiga Format editor Marcus Dyson speaks about this at around 38:30 in Maximum Power Up podcast episode 139.

Throughout 1992, Amiga Format’s coverdisks featured a series of fully-functional software packages known as The Amiga Format Collection. This collection included:

- Fractal landscape generator Vista (AF33)

- HAM paint and animation package Spectracolor Jr (AF35)

- Machine code assembler Devpac 2 (AF39)

Adorning Amiga Format 42’s cover was AMOS.

I’d read about AMOS before. Amiga Format issue 35 had a demo of Easy AMOS, and even the games-centric Amiga Power mentioned AMOS in its Public Domain section. And now, suddenly every Amiga Format buyer had an unrestricted copy of it.

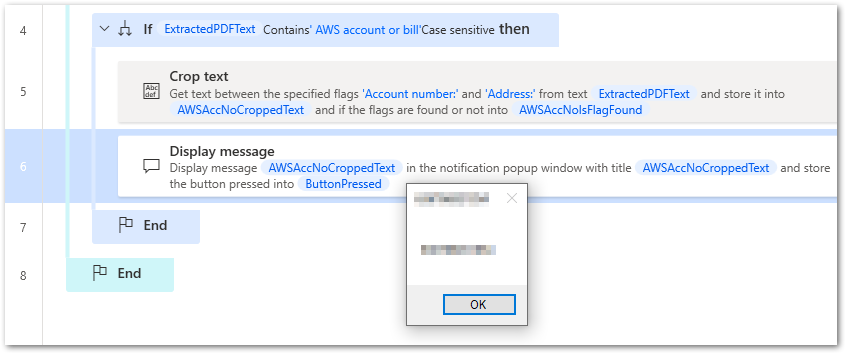

Amiga Format partnered this with a Learning AMOS magazine section. Basic principles, syntax and screenshots were introduced across nine pages, with the tenth page having a tutorial for a scrolling text demo. This was to be the first part of a monthly Mastering AMOS series.

What A Pong

Now, young me didn’t pay much attention to this part of issue 42. It was Christmas after all, and I had demos of Lemmings 2, Fire & Ice and Bill’s Tomato Game to keep me busy!

Enter Amiga Format issue 43. This month’s AMOS tutorial was Solo Pong – a simple version of Pong with the player controlling both bats. This was deliberate – the focus here was on learning AMOS as opposed to learning coding.

Young me was intrigued! The scrolling demo didn’t really appeal, but this was a game! The complete script was only half a page long, and the tutorial explained each line. So I gave it a shot, and within an afternoon I had my very own Pong clone!

The code itself made no sense to me – I was copying words from a magazine onto a screen and hoping for the best. But I found the process itself captivating. The idea that all the software I’d run on my Amiga was made by words on a screen – and words that I could type myself at that – was a revelation!

On an unrelated note, Amiga Format 43 included the last of the Amiga Format Collection series – the database package Prodata. So not only did issue 43 introduce me to coding – it also foreshadowed my eventual tech career!

Mastering AMOS Legacy

Amiga Format continued the Mastering AMOS series until issue 51. They went on to include AMOS Professional with issue 67, and ran an Ultimate AMOS tutorial series from issue 68 to issue 73.

Damien Junior didn’t do much more with the Mastering AMOS series after Amiga Format 43. I vaguely remember having a go at the Amiga Format 44 tutorial, which added some bells and whistles to the existing Pong code. But after that, it started going over my head and I lost interest. Although to be fair, I was a child!

The coding curiosity remained. As the 1990s went by, I found myself trying out Amiga Format’s Devpac 2 and Blitz Basic tutorials, and by the end of the decade I was learning Excel formulae and VBScript at school. During the 2000s I started using HTML, CSS and PHP, and in the 2010s I began using T-SQL, PowerShell and DAX at work.

Which brings me to the present day, where I find myself learning Python and experimenting with reStructuredText (for Read the Docs) and YAML (for CloudFormation). And I thought, after thirty years, why not revisit the Amiga Format tutorial and see how I feel about AMOS now?

Thirty Years Later

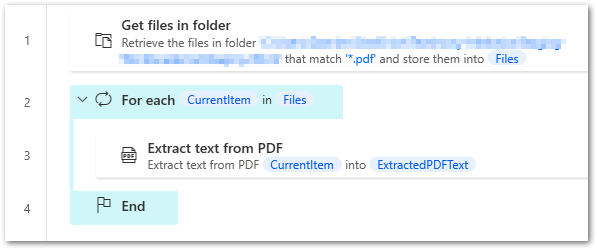

Like many self-respecting Amiga fans, I have WinUAE installed on my laptop, and an ADF of the AMOS coverdisk isn’t hard to find. So I fired everything up! And, after thirty minutes of typing, squinting and error troubleshooting, witness the result of my mighty endeavour – SHARK PONG!

I clearly wasn’t squinting hard enough though. In this script, AMOS uses the ball’s X coordinate to determine if a player has lost. The tutorial’s LOSE conditions are:

If X>=320 Then LOSE : Goto RESTART

If X<=-20 Then LOSE : Goto RESTART

I missed the minus in the second IF statement and wrote:

If X<=20 Then LOSE : Goto RESTART

This created a version of Shark Pong that Left Paddle can never win, as the losing X coordinate is in front of the paddle.
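For anyone who’d rather see the bug than squint at it, here’s a small sketch in Python (which I’m currently learning) that re-creates just the two LOSE checks. It’s a hypothetical translation rather than the tutorial’s code: the function and constant names are my own, and it ignores the paddles and ball movement entirely.

```python
# Hypothetical Python re-creation of the AMOS LOSE checks from Shark Pong.
# Assumption: x stands in for the AMOS variable X (the ball's horizontal
# coordinate). Paddles and ball movement are not modelled.

RIGHT_EDGE = 320      # tutorial: If X>=320 Then LOSE
LEFT_EDGE = -20       # tutorial: If X<=-20 Then LOSE
TYPO_LEFT_EDGE = 20   # my typo:  If X<=20 Then LOSE


def tutorial_lose(x: int) -> bool:
    """Return True if the tutorial's conditions would end the rally."""
    return x >= RIGHT_EDGE or x <= LEFT_EDGE


def typo_lose(x: int) -> bool:
    """Return True if the mistyped conditions would end the rally."""
    return x >= RIGHT_EDGE or x <= TYPO_LEFT_EDGE


# Collect every X coordinate where the two versions disagree.
disagreements = [x for x in range(-30, 331) if tutorial_lose(x) != typo_lose(x)]
print(disagreements[0], disagreements[-1], len(disagreements))
```

Running it prints `-19 20 40`: every X from -19 to 20 counts as a loss under the typo but not under the tutorial, so the left-hand rally ends 40 coordinate units earlier than intended. The right-hand check is identical in both versions.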

But then again, it wouldn’t be an early 90s tutorial follow-along without an unintended and slightly hilarious bug, right? And the command to stop running the code? Control-C. That broke my brain briefly.

But yeah. Revisiting the same code that captivated me 30 years ago was a blast! The original tutorial code is in my GitHub for those curious to see it, both as an unformatted text file and in glorious BlitzBasic!

Further Reading

- The Internet Archive has an in-browser viewable PDF of the AMOS Pong tutorial in Amiga Format issue 43 and many other issues.

- Commodore Bombjack has PDF downloads of all Amiga Format issues.

- Amiga Source Preservation has a free library of AMOS literature.

Summary

In this post, I responded to July 2023’s T-SQL Tuesday #164 Invitation and talked about emotive code on the Amiga.

I had a lot of fun writing this! A phrase like ‘emotive Amiga code’ might not sound fascinating on paper, but it was interesting to revisit that tutorial after so long. I even finally answered a question that’s lingered in my head for a few decades: why a Christmas issue said January on the cover.

Thanks to Erik for this month’s topic! My previous T-SQL Tuesday posts are here. If this post has been useful, please feel free to follow me for future updates.

Thanks for reading ~~^~~