In this post, I use popular Python modules & AWS managed serverless services to extract WordPress API data.

Introduction

Last year, I tested my Python skills by analysing amazonwebshark’s MySQL database with Python. I could have done the same in 2024, but I wouldn’t have learned anything new and it felt a bit pointless. One of my YearCompass 2023-2024 goals is to build more, so I decided instead to create a data pipeline that uses popular Python modules & AWS services to extract my WordPress data through the WordPress API.

A data pipeline involves many aspects, which future posts will explore. This post focuses on extracting data from my WordPress database and storing it as flat files in AWS.

Firstly, I’ll discuss my architectural decisions for this part of the pipeline. Then I’ll examine the functions in my Python script that interact with AWS and perform data extraction. Finally, I’ll bring everything together and explain how it all works.

Architectural Decisions

In this section, I examine my architectural decisions and outline the pipeline’s processes.

Programming Language

My first decision concerned which programming language to use. I’m using Python here for several reasons:

- I use Python at work and am always looking to refine my skills.

- Several AWS services natively support Python.

- Python SDKs like Boto3 and awswrangler support my use case.

Data Extraction

Next, I chose what data to extract from my WordPress MySQL database. The tables I’m interested in are explained in greater detail in 2023’s Deep Dive post.

In November I migrated amazonwebshark to Hostinger, whose MySQL remote access policy requires an IP address. While this isn’t a problem locally, AWS is a different story. I’d either need an EC2 instance with a static IP, or a Lambda function with several networking components. These are time and money costs I’d prefer to avoid, so no calling the database.

Fortunately, WordPress has an API!

WordPress API

The WordPress REST API lets applications interact with WordPress sites by sending and receiving data as JSON objects. Public content like posts and comments is accessible to anyone via the API, while private and password-protected content requires authentication.

While researching options, I stumbled across MiniOrange’s Custom API for WordPress plugin. It has a simple interface and a good feature list:

Custom API for WordPress plugin allows you to create WordPress APIs / custom endpoints / REST APIs. You can Fetch / Modify / Create / Delete data with an easy-to-use graphical interface. You can also build custom APIs by writing custom SQL queries for your WP APIs.

https://plugins.miniorange.com/custom-api-for-wordpress

This meant I could start using it straight away!

The free plan lets users create as many endpoints as needed. But it also has a pretty vital limitation – API key authentication is only possible on their Premium plan. In the free plan, all endpoints are public!

Now let me be clear – this isn’t necessarily a problem. After all, the WordPress API is public! And my WordPress data doesn’t contain any PII or sensitive data. No – the risk I’m trying to address here isn’t a security one.

Public endpoints can be called by anyone or anything at any time. WordPress has dedicated, optimised resources that auto-scale on demand, whereas I have one Hostinger server that handles every site process. Could it be DDoSed into oblivion by tons of API calls from bad actors? Do I want to find out?

As I’m using the plugin’s free tier here, I’ll mitigate my risks by:

- Adding random strings to the endpoints to make them less guessable.

- Not showing the endpoints in my script or this post.

So ok – how will I get the API endpoints then?

Parameters

Next, I need to decide how my script will get the endpoints to query and the S3 bucket name to store the results.

With previous scripts, I’ve used features like gitignore and dot sourcing to hide parameters I don’t want to expose. While this works, it isn’t ideal. Dot sourcing breaks if the file paths change, and even with gitignore any credentials are still hardcoded into the script locally.

A better approach is to use a process similar to a password manager, where an authenticated user or role can request and receive credentials using secure channels. AWS has two services for this requirement: AWS Secrets Manager and AWS Systems Manager Parameter Store.

Secrets Manager Vs Parameter Store

Secrets Manager is designed for managing and rotating sensitive information like database credentials, API keys, and other types of secrets. Conversely, Parameter Store is designed for storing configuration data, including plaintext or sensitive information, in a hierarchical structure.

I’m using Parameter Store here for two reasons:

- My parameters are for configuration, and on their own won’t lead to account or service compromise. Therefore, I don’t need most of Secrets Manager’s features so it’s overkill for this scenario.

- My requirements fall within Parameter Store’s free tier. The same parameters in Secrets Manager would cost $0.40 per secret per month, or $0.80 for my two parameters. That isn’t much, but it’s still an unnecessary expense for this scenario. It would also conflict with the Cost Optimization pillar of the AWS Well-Architected Framework.

Storage

Next, I need to decide where to store the API data. And I’m already using AWS for parameters, so I was always going to end up using S3. But what makes S3 an obvious fit here?

- Integration: S3 is one of the oldest and most mature AWS services. It is well supported by both the Python SDK and other AWS services like EventBridge, Glue and Athena for processing and analysis.

- Scalability: S3 will accept objects from a couple of bytes to terabytes in size (although if I’m generating terabytes of data here something is very wrong!). I can run my script at any time and as often as I want, and S3 will handle all the data it receives.

- Cost: S3 won’t be entirely free here because I’ll be creating and accessing lots of data during testing. But even so, I expect it to cost me pence. I’m not keeping versions at this stage either, so my costs will only be for the current objects.

Much has been written about S3 over the years, so I’ll leave it at this.

Use Of Flat Files

Finally, let’s examine the decision to store flat files in the first place. The data is already in a database – why duplicate it?

- Decoupling: Putting raw data into S3 at an early stage of the pipeline decouples the database at that point. Databases can become inaccessible, corrupted or restricted. The S3 data would be completely unaffected by these database issues, allowing the pipeline to persist with the available data.

- Reduced Server Load: Storing data in S3 means the rest of the pipeline reads the S3 objects instead of the database tables. This reduces the Hostinger server’s load, letting it focus on transactional queries and site processes. S3 is almost serving as a read replica here.

- Security: It is simpler for AWS services to access data stored in S3 than the same data stored on Hostinger’s server. AWS services accessing server data require MySQL credentials and a whitelisted IP. In contrast, AWS services accessing S3 data require…an IAM policy.

Architectural Diagram

This is an architectural diagram of the expected process:

- User triggers the Python function.

- Python interacts with AWS Python SDK.

- SDK calls Parameter Store for WordPress & S3 parameters. These are returned to Python via the SDK.

- Python calls WordPress API. WordPress API returns data.

- Python writes API data to S3 bucket via the SDK.

Setup & Config

I completed some local and cloud configurations before I started writing my Python script to extract WordPress API data. This section explores my laptop setup and AWS infrastructure.

Local Machine

I’m using Windows 10 and WSL version 2 to create a Linux environment with the Ubuntu 22.04.3 LTS distribution. I’m using Python 3.12, with a fresh Python virtual environment for installing my dependencies.

AWS Data Storage

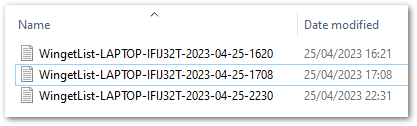

I already have an S3 bucket for ingesting raw data, so that’s sorted. I made a wordpress-api prefix in that bucket to partition the uploaded data.

This bucket doesn’t have versioning enabled because it has a high object turnover. Versioning is unneeded and could get very expensive without a good lifecycle policy! While a lifecycle policy would be simple to set up, it’s wasted effort at this point in the pipeline.

Another factor against versioning is that I can recreate S3 objects from the MySQL database. As objects are reproducible, there is no need for the delete protection offered by versioning.

AWS Parameters

I’m using Parameter Store to hold two parameters: my S3 bucket name and my WordPress API endpoints. Each of these uses a different parameter type.

The S3 bucket name is a simple string that uses the String Parameter Type. This is intended for blocks of text up to 4096 characters (4 KB). The API endpoints are a collection of strings generated by the WordPress plugin. I use the StringList Parameter Type here, which is intended for comma-separated lists of values. This lets me store all the endpoints in a single parameter, optimising my code and reducing my AWS API calls.

Python Script

In this section, I examine the various parts of my Python script that will extract data from the WordPress API. This includes functions, methods and intended functionality.

Advisory

Before continuing I want to make something clear. This advisory is on my amazonwebshark artefacts GitHub repo, but it bears repeating here too:

Artefacts within this post have been created at a certain point in my learning journey. They do not represent best practices, may be poorly optimised or include unexpected bugs, and may become obsolete.

If I find better ways of doing these processes in future then I’ll link to or update posts where appropriate.

Logging

Firstly, I’ll sort out some logging.

The logging module is a core Python library, so I can import it without a pip install command. I then use logging’s basicConfig function to set my desired parameters:

    logging.basicConfig(
        level=logging.INFO,
        format="%(asctime)s [%(levelname)s]: %(message)s",
        datefmt="%Y-%m-%d %H:%M:%S"
    )

level sets the logging level to start at. logging.INFO records information about events like authentications, conversions and confirmations.

format sets how the logs will appear in the console. Sections enclosed by % and ( )s are placeholders that will be formatted as strings. Other characters are printed as-is. Here, my logs will return as Date/Time [Log Level]: Log Message.

datefmt sets the date/time format for format’s asctime using the same directives as time.strftime().

These settings will give me logs in the style of:

    2024-01-11 09:44:39 [INFO]: Parameter found.
    2024-01-11 09:44:39 [INFO]: API endpoints returned.
    2024-01-11 09:44:39 [INFO]: Getting S3 parameter...
    2024-01-11 09:44:39 [WARNING]: S3 parameter not found!

This lets me keep track of what stage Python is at when I extract WordPress API data.

boto3 Session

To call the AWS services I want to use, I need to create a boto3 session. This object stores configuration state, including settings and credentials, and is used to create the service clients that talk to AWS. Without this, Python cannot access AWS Parameter Store, and so cannot extract WordPress API data.

To begin, I run pip install boto3 in the terminal. I then script the following:

    import logging
    import boto3

    session = boto3.Session()

This code snippet performs two new actions:

- Imports the boto3 module.

- Instantiates an instance of the boto3 module’s Session class.

As Session has no arguments, it will use the first AWS credentials it finds. In AWS, these will be from the Lambda function’s IAM role. No problems there. But I have several AWS profiles on my laptop, and my default profile is for a different AWS account!

In response, I can set an AWS profile using VSCode’s launch.json debugging object. By adding "env": {"AWS_PROFILE": "{my_profile_name}"} to the end of my entry in the configurations list, I can specify which local AWS profile to use without altering the Python script itself:

    {
        "version": "0.2.0",
        "configurations": [
            {
                "name": "Python: Current File",
                "type": "python",
                "request": "launch",
                "program": "${file}",
                "console": "integratedTerminal",
                "justMyCode": true,
                "env": {"AWS_PROFILE": "profile"}
            }
        ]
    }

Functions

This section examines my Python functions that extract WordPress API data. Each function has an embedded GitHub Gist and an explanation of the arguments and processes.

Get Parameters Function

Firstly, I need to get my parameter values from AWS Parameter Store.

Here, I define a get_parameter_from_ssm function that expects two arguments:

- ssm_client: the boto3 client used to contact AWS.

- parameter_name: the name of the required parameter.

I use type hints to annotate parameter_name and the returned object type as strings. For a great introduction to type hints, take a look at this short video from AWS Mad Lad Matheus Guimaraes:

I then create a try except block containing a response object which uses the ssm_client.get_parameter function to try getting the requested parameter. If this fails, the AWS error is logged and a blank string is returned. The parameter value is returned if successful.

I am capturing the AWS exceptions using the botocore module because it provides access to the underlying error information returned by AWS services. When an AWS service operation fails, it usually returns an error response that includes details about what went wrong. botocore can access these responses programmatically and log more exception details than the Python default.
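As a rough illustration of the behaviour described above, here is a minimal sketch of such a function. It is an approximation and not the version in my repo: the log wording is made up, and the import fallback exists only so the sketch still runs where botocore isn't installed.

```python
import logging

try:
    from botocore.exceptions import ClientError
except ImportError:
    # Assumption for this sketch only: fall back to a generic exception
    # so the example still runs where botocore is not installed.
    ClientError = Exception


def get_parameter_from_ssm(ssm_client, parameter_name: str) -> str:
    """Return the parameter's value, or a blank string if AWS reports an error."""
    try:
        # get_parameter returns a dict shaped like {"Parameter": {"Value": ...}}
        response = ssm_client.get_parameter(Name=parameter_name)
    except ClientError as error:
        # Log the AWS error details, then signal failure with a blank string
        logging.warning("Unable to get parameter %s: %s", parameter_name, error)
        return ""
    return response["Parameter"]["Value"]
```

With a real client this would be called as get_parameter_from_ssm(client_ssm, '/some/parameter/name'), where the parameter name is whatever exists in Parameter Store.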

I now have two additional changes to my main script:

    import logging
    import boto3
    import botocore

    session = boto3.Session()
    client_ssm = session.client('ssm')

- botocore needs to be imported, so I add import botocore to the script. I don’t need to install botocore because it was installed with boto3.

- I need a Simple Systems Manager (SSM) client to interact with AWS Systems Manager Parameter Store. I create an instance of the SSM client using my existing session and assign it to client_ssm. I can now use client_ssm throughout my script.

Get Filename Function

Next, I want to get each API endpoint’s filename. The filename has some important uses:

- Logging processes without using the full endpoint.

- Creating S3 objects.

A typical endpoint has the schema https://site/endpointname_12345/. There are two challenges here:

- Extracting the name from the string.

- Removing the name’s random characters.

I define a get_filename_from_endpoint function, which expects an endpoint argument with a string type hint and returns a new string.

Firstly, my name_full variable uses the rsplit method to capture the substring I need, using forward slashes as separators. This converts https://site/endpointname_12345/ to endpointname_12345.

Next, my name_full_last_underscore_index variable uses the rfind method to find the last occurrence of the underscore character in the name_full string.

Finally, my name_partial variable uses slicing to extract a substring from the beginning of the name_full string up to (but not including) the index specified by name_full_last_underscore_index. This converts endpointname_12345 to endpointname.

If the function is unable to return a string, an exception is logged and a blank string is returned instead.
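The steps above can be sketched roughly as follows. The variable names come from the description, but the exact rsplit arguments, the trailing-slash handling and the no-underscore case are my assumptions for this example.

```python
import logging


def get_filename_from_endpoint(endpoint: str) -> str:
    """Turn https://site/endpointname_12345/ into endpointname."""
    try:
        # Assumption: drop any trailing slash, then keep the last path segment
        name_full = endpoint.rstrip("/").rsplit("/", 1)[-1]

        # Position of the final underscore, or -1 if there isn't one
        name_full_last_underscore_index = name_full.rfind("_")
        if name_full_last_underscore_index == -1:
            # Assumption: with no random suffix to remove, keep the whole name
            return name_full

        # Everything before the final underscore
        name_partial = name_full[:name_full_last_underscore_index]
        return name_partial
    except Exception:
        # For example, a non-string endpoint has no rstrip method
        logging.exception("Unable to parse a filename from the endpoint.")
        return ""


print(get_filename_from_endpoint("https://site/endpointname_12345/"))  # endpointname
```

Because rfind looks for the last underscore, names that contain underscores themselves keep everything except the random suffix.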

No new imports are needed here. So let’s move on!

Call WordPress API Function

My next function queries a given API endpoint and handles the response.

Here, I define a get_wordpress_api_json function that expects three arguments:

- requests_session: the Requests session used to call the API.

- api_url: the WordPress API URL with a string type hint.

- api_call_timeout: the number of seconds to wait for a response before timing out.

requests.Session is a part of the Requests library, and creates a session object that persists across multiple requests. I can now use the same session throughout the script instead of constantly creating new ones.

I open a try except block and create a response object. requests.Session attempts to call the API URL. If the response status code is 200 OK then the response is returned as a raw JSON dictionary.

This function can fail in three ways:

- The status code isn’t 200. While this includes 3xx, 4xx and 5xx codes, it also includes the other 2xx codes. This was deliberate, as any 2xx responses other than 200 are still unusual, and something I want to know about.

- The API call times out.

- Requests throws an exception.

In all cases, the function raises an exception and doesn’t proceed. This was a conscious choice, as an API call failure represents a critical and unrecoverable problem with the WordPress API that should ring alarm bells.
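Here is a minimal sketch of that behaviour. It assumes the session only needs a get method that accepts a timeout, which requests.Session provides. The RuntimeError type and the log wording are my own choices for this example and not necessarily what the real script uses.

```python
import logging


def get_wordpress_api_json(requests_session, api_url: str, api_call_timeout: int):
    """Call one endpoint and return the parsed JSON, raising on any failure."""
    try:
        response = requests_session.get(api_url, timeout=api_call_timeout)
    except Exception:
        # Covers timeouts and any other error raised while making the call.
        # The URL is deliberately left out of the log message.
        logging.exception("API call failed.")
        raise

    # Anything other than 200 OK, including other 2xx codes, is a failure
    if response.status_code != 200:
        raise RuntimeError(f"Unexpected status code: {response.status_code}")

    return response.json()
```

With the real library this would be called as get_wordpress_api_json(requests.Session(), api_url, 30). Because the sketch only relies on get, status_code and json, it can also be exercised with a stand-in session during testing.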

As I’m using the Requests module now, I need to run pip install requests in the terminal and add import requests to my script. I then create my requests session in the same way as my boto3 session.

I’m also now using json – another pre-installed core Python module ready for import:

    import logging
    import json
    import requests
    import boto3
    import botocore

    session = boto3.Session()
    client_ssm = session.client('ssm')
    requests_session = requests.Session()

S3 Upload Function

Finally, I need to put my JSON data into S3.

I define a put_s3_object function that expects four arguments:

- s3_client: the boto3 client used to contact AWS.

- bucket: the S3 bucket to create the new object in.

- name: the name to use for the new object.

- json_data: the data to upload.

I give string type hints to the bucket, name and json_data arguments. This is especially important for json_data because of what I plan to do with it.

I open a try except block and try to use the S3 client’s put_object function to upload the JSON data to S3. In this context:

- Body is the JSON data I want to store.

- Bucket is the S3 bucket name from AWS Parameter Store.

- Key is the S3 object key, using an f-string that includes the name from my get_filename_from_endpoint function.

The JSON data is created by my get_wordpress_api_json function, which returns that data as a dictionary. Passing a dictionary to put_object’s Body argument will throw a parameter validation error because its type is invalid for the Body parameter. json_data’s string type hint will help prevent this scenario, although Python doesn’t enforce type hints at runtime.

Moving on, the S3 client’s put_object function attempts to upload the data to the S3 bucket’s wordpress-api prefix as a new JSON object. If this operation succeeds, the function returns True. If it fails, a botocore exception is logged and the function returns False.
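The upload step can be sketched as below. The Body, Bucket and Key arguments are put_object's real parameters, but the exact key layout (the wordpress-api prefix followed by the name and a .json extension) is an assumption for this example, as is the import fallback that lets the sketch run without botocore installed.

```python
import logging

try:
    from botocore.exceptions import ClientError
except ImportError:
    # Assumption for this sketch only: fall back to a generic exception
    # so the example still runs where botocore is not installed.
    ClientError = Exception


def put_s3_object(s3_client, bucket: str, name: str, json_data: str) -> bool:
    """Upload a JSON string to S3, returning True on success and False on failure."""
    try:
        s3_client.put_object(
            Body=json_data,                    # must be a string or bytes, not a dict
            Bucket=bucket,                     # bucket name from Parameter Store
            Key=f"wordpress-api/{name}.json",  # hypothetical key layout
        )
    except ClientError as error:
        logging.error("Unable to upload %s: %s", name, error)
        return False
    return True
```

Returning a boolean keeps the calling code simple: it only needs to check the result to decide which counter to update.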

While no new imports are needed, I do now need an S3 client alongside the SSM one to allow S3 interactions:

    session = boto3.Session()
    client_ssm = session.client('ssm')
    client_s3 = session.client('s3')
    requests_session = requests.Session()

Script Body

This section examines the body of my Python script. I look at the script’s flow, the objects passed to the functions and the responses to successful and failed processes.

Variables

In addition to the imports and sessions already listed, I have some additions:

- The S3 bucket and WordPress API Parameter Store names.

- An api_call_timeout value for the WordPress API requests in seconds.

- Three endpoint counts used for monitoring failures, successes and overall progress.

    # Parameter Names
    parametername_s3bucket = '/s3/lakehouse/name/raw'
    parametername_wordpressapi = '/wordpress/amazonwebshark/api/mysqlendpoints'

    # API call timeout in seconds
    api_call_timeout = 30

    # Counters
    endpoint_count_all = 0
    endpoint_count_failure = 0
    endpoint_count_success = 0

Getting The Parameters

The first part of the script’s body handles getting the AWS parameters.

Firstly, I pass my SSM client and WordPress API parameter name to my get_parameter_from_ssm function.

If successful, the function returns a comma-separated string of API endpoints. I transform this string into a list using .split(",") and assign the list to api_endpoints_list. Otherwise, an empty string is returned.

Calling .split(",") on this empty string gives a list containing a single empty string, which is assigned to api_endpoints_list. This is why get_parameter_from_ssm returns a blank string if it hits an exception. split(",") has no issues with a blank string, but throws attribute errors with returns like False and None.

I then check if api_endpoints_list contains anything using if not any(api_endpoints_list). return ends the script execution if the list contains no values, otherwise the number of endpoints is recorded.
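To see why this check works, here is a small standalone example. The parse_endpoints helper and the URLs are hypothetical and exist only for this illustration.

```python
def parse_endpoints(parameter_value: str) -> list:
    """Split a comma-separated StringList value into a list of endpoints."""
    return parameter_value.split(",")


found = parse_endpoints("https://site/a_1/,https://site/b_2/")
missing = parse_endpoints("")  # the blank string returned after a failed lookup

print(found)             # ['https://site/a_1/', 'https://site/b_2/']
print(missing)           # ['']
print(not any(missing))  # True, so the script would stop here
```

Splitting a blank string gives a list holding one empty string. That list isn't empty, but any() treats the empty string as falsy, so the check still catches it.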

A similar process happens with the S3 bucket parameter. My get_parameter_from_ssm function is called with the same SSM client and the S3 parameter name. This time a simple string is returned, so no splitting is needed. This string is assigned to s3_bucket, and if it’s found to be empty then return ends the current execution.

If both api_endpoints_list and s3_bucket pass their tests, the script moves on to the next section.

Getting The Data

The second part of the script’s body handles getting data from the API endpoints.

Firstly, I open a for loop for each endpoint in api_endpoints_list. I pass each endpoint to my get_filename_from_endpoint function to get the name to use for logging and object creation. This name is assigned to object_name.

object_name is then checked. If found to be empty, the loop skips that endpoint to prevent any useless API calls and to preserve the existing S3 data. The failure counter increments by 1, and continue ends the current iteration of the for loop.

Once the name is parsed, my Requests session, timeout value and current API endpoint are passed to the get_wordpress_api_json function. This function returns a JSON dictionary that I assign to api_json. api_json is then checked and, if empty, skipped from the loop using continue.

Next, I need to transform the api_json dictionary object before an S3 upload attempt. If I pass api_json to S3’s put_object as is, the Body parameter throws a ParamValidationError because it can’t accept dictionaries. I use the json.dumps function to transform api_json to a JSON-formatted string and assign it to api_json_string, which put_object’s Body parameter can accept.

I can now pass my S3 client, S3 bucket name, object_name and api_json_string to my put_s3_object function. This function’s output is assigned to ok, which is then checked and updates the success or failure counter as appropriate.

Once all APIs are processed, the loop ends and the final success and failure totals are logged.
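The loop's control flow can be sketched as follows. To keep the example self-contained, the three steps are passed in as plain callables, which is not how the real script is organised. The process_endpoints name and the choice to count an empty API response as a failure are also assumptions.

```python
import json
import logging


def process_endpoints(endpoints, get_name, fetch_json, upload) -> dict:
    """Run the name, fetch and upload steps for each endpoint and count outcomes."""
    counts = {"all": len(endpoints), "success": 0, "failure": 0}

    for endpoint in endpoints:
        object_name = get_name(endpoint)
        if not object_name:
            # No usable name: skip the API call and leave existing S3 data alone
            counts["failure"] += 1
            continue

        api_json = fetch_json(endpoint)
        if not api_json:
            # Assumption: an empty response is counted as a failure
            counts["failure"] += 1
            continue

        # put_object's Body cannot take a dict, so serialise to a string first
        api_json_string = json.dumps(api_json)

        ok = upload(object_name, api_json_string)
        if ok:
            counts["success"] += 1
        else:
            counts["failure"] += 1

    logging.info(
        "Endpoints: %s. Successes: %s. Failures: %s.",
        counts["all"], counts["success"], counts["failure"],
    )
    return counts
```

Each continue abandons only the current endpoint, so one bad endpoint doesn't stop the remaining ones from being processed.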

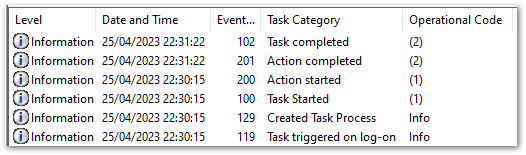

Adding A Handler

Finally, I encapsulate the script’s body into a lambda_handler function. Handlers let AWS Lambda run invoked functions, so I’ll need one when I deploy my script to the cloud.

Resources

The full Python script has been checked into the amazonwebshark GitHub repo. Included is a requirements.txt file for the Python libraries used to extract the WordPress API data.

Summary

In this post, I used popular Python modules & AWS managed serverless services to extract WordPress API data.

I took a lot away from this! The script was a good opportunity to practise my Python skills and try out unfamiliar features like type hints, continue and requests.Session. Additionally, I made several revisions to control flows, logging and error handling that were triggered by writing this post. The script is clearer and faster as a result.

With the script complete, my next step will be deploying it to AWS Lambda and automating its execution. So keep an eye out for that!

Thanks for reading ~~^~~