In this post, I use the free YearCompass booklet to reflect on 2023 and to plan some professional goals for 2024.

Introduction

Towards the end of last year, I used YearCompass for the first time because I wanted to commit to some 2023 goals. YearCompass is a proven and long-lived framework with over 18k Facebook Likes and availability in 52 languages, so it made sense to try it out.

It went very well! So much so that I have used YearCompass again to choose my 2024 professional goals. The first half of this post covers 2023; the second half 2024.

Firstly, let’s examine YearCompass itself.

YearCompass

From the YearCompass site:

“YearCompass is a free booklet that helps you reflect on the year and plan the next one. With a set of carefully selected questions and exercises, YearCompass helps you uncover your own patterns and design the ideal year for yourself.” (YearCompass.com)

YearCompass started as a reflection tool for a small group of friends and was made publicly available in 2012. It is available as an A4 and A5 PDF, with options to fill out the booklet both digitally and by hand. YearCompass is currently available in 52 languages.

YearCompass positions itself as an alternative to New Year’s Resolutions. Each PDF has two sections. The first half examines the previous year and the second half considers the next one.

Each section consists of a series of prompts and questions. These guide the user through the reflection process and help them identify their priorities and plan for the future.

Some of the questions are:

- What are you most proud of?

- Who are the three people who influenced you the most?

- What does the year ahead of you look like?

Prompts include:

- List your three biggest challenges from last year.

- This year, I will be bravest when…

- I want to achieve these three things the most.

There are no hard and fast rules for completing YearCompass. The booklet suggests a break between sections, although some prefer to do the whole thing in one sitting. Personally, I don’t complete every section, as by a certain point I have what I need.

This year, I had my 2022 and 2023 YearCompass PDFs open side by side. It made sense for 2022’s compass to inform 2023’s, and it gave me an idea of which goal-setting approaches worked best.

2023 Retrospective

In this section, I look back at my 2023 goals and see how things went with them.

Confidence Building & Anxiety Management

This goal was focused on self-belief. I wanted to bolster my confidence and improve my technical skillset.

2022 saw my first tech conference at AWS Summit London. While London is an intense experience for a socially anxious shark, it successfully expanded my comfort zone by putting me around unfamiliar faces with similar interests.

2023’s summit was easier on the senses, and I had chats with suppliers and AWS Solutions Architects about topics including data lineage, lakehousing and event orchestration.

I also attended some events closer to home: May’s DTX Manchester and October’s Data Relay Manchester.

Separately, I used my DataCamp subscription to improve my Data Engineering skills. Their Data Engineer track has several great Python courses and helped me benchmark my T-SQL skills. Their Object-Oriented Programming in Python and Writing Functions in Python courses also helped me plug some work-related gaps.

Helpfully, DataCamp has a new My Year In Data feature that summarises the 17 courses I completed this year.

Finally, I also renewed my AWS SysOps Administrator certification in October using the now-traditional duo of Stephane Maarek and Tutorials Dojo. This certification validates my experience in deploying, managing, and operating workloads on AWS and puts me in a good position for my 2024 goals.

Collaborating & Communicating

This goal was focused on finding my voice and improving my work quality. I wanted to strengthen my contributions and increase my value.

A big part of this was making sure that I understood the language and terms being used around me. My ultimate aim was to use and apply these terms correctly and appropriately. 2023 was the year I became familiar with a range of data and programming terms.

I also signed up for some Data Engineering-focused feeds to improve my industry knowledge. Examples include the Data Engineering Weekly and Seattle Data Guy newsletters, and the Advancing Analytics and nullQueries YouTube channels.

Collaboration-wise, I also hosted my first T-SQL Tuesday this year. It was great to work with Steve Jones, and I get to include myself on a pretty illustrious list of industry professionals now!

Finally, I also made it to my first User Group meeting! While I only made it to one event this year, I overcame a lot of personal anxiety there and look forward to exploring my local user groups more in 2024.

Knowledge Sharing & Presenting

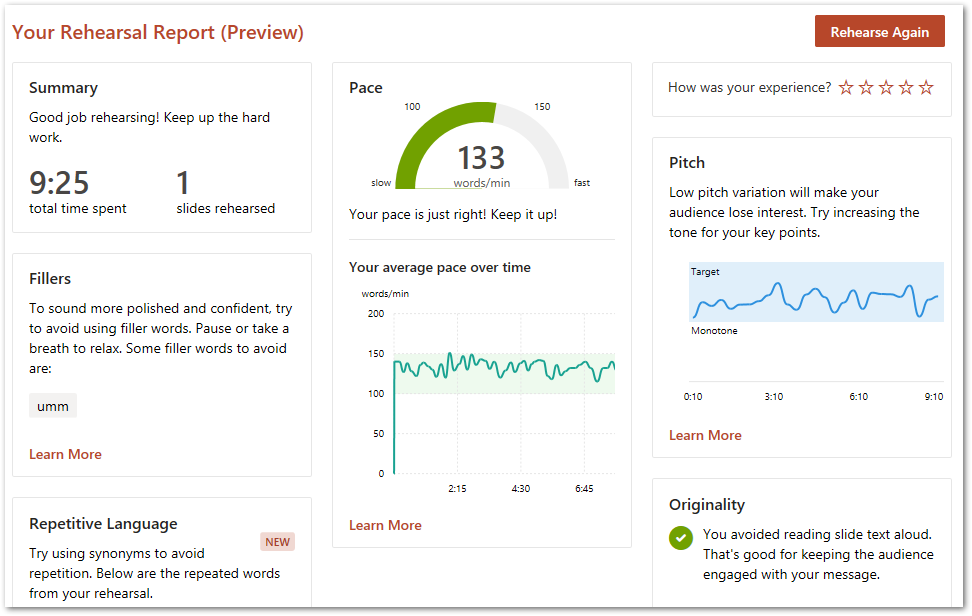

This goal was focused on creating value. I wanted to improve my presentation skills, and find real-world applications for the knowledge gained from my posts and certifications.

It’s time to discuss New Stars of Data!

Upon viewing the event’s Call For Speakers, I saw a great chance to work on this goal. I’d already started writing a VS Code Data Wrangler post at the time (which ultimately became the New Stars Of Data Retrospective post) and quickly realised the post would lend itself very well to the requested abstract.

The combination of a sport I enjoy, data and code I’m familiar with, and an impressive VS Code extension made for a smooth journey from storyboarding to delivery. The session was a pleasure to create and deliver, and was exactly the introduction to presenting that I was after!

I also wrote a blog series while creating the session, both as something to look back on and to potentially help future speakers.

2024 Goals

In this section, I use YearCompass to decide on my 2024 professional goals. For each goal, I’ll write a user story and then explain my reasoning.

Build Technology Projects

As a cloud enthusiast, I want to complete valuable project builds so that I can develop and validate my knowledge and skills, and have subject matter for future session abstracts.

It’s fair to say that 2023 has been a year of learning, with sources including:

- Books, magazines and websites.

- Learning and revision portals.

- Olivier Van Steenlandt’s New Stars Of Data mentorship.

All of these have given me ideas for things I can build! Some are completely new. Some have been gaining steam for a while. Others have been put within reach by recent innovations.

My first YearCompass 2024 goal is to start building them! As well as testing my skills and validating my knowledge, some of these projects would probably lend themselves to a session abstract or two!

Additionally, I’m considering studying towards an AWS Professional certification in 2025. So if I decide to go ahead with that, building a bunch of stuff would be well worth the effort and investment!

Finally, although I’ve gotten better at finishing projects since starting amazonwebshark, there’s always room for improvement. This No Boilerplate video about The Cult Of Done Manifesto really resonated with me upon first watch, and I’ll be benchmarking my 2024 projects against it.

Build My Personal Brand

As an IT professional, I want to build my personal brand so that I improve my soft skills and define my public image.

Continuing the build theme, my second YearCompass 2024 goal is focused on my soft skills and visibility.

I’ve spoken about confidence and anxiety previously. I will always be working on this, but it isn’t something I want to hide behind. As my contributions to this site and the wider community grow, I need to consider how those contributions influence the projected image of my personality, skills, experience, and behaviour.

Furthermore, in an age where AI tools and models are getting increasingly adept at a range of tasks, practising and demonstrating my soft skills is perhaps more important than ever. As technology becomes increasingly democratised, it is no longer enough to focus on technical skills alone.

I’ve already begun to establish my personal brand via amazonwebshark and social media. With my 2024 goals likely to put me in front of more fresh faces for the first time, now is definitely the time to make my personal brand a primary focus.

Build A Second Brain

As just a normal man, I want to build a second brain so that I can organise my resources and work more efficiently.

For my final YearCompass 2024 goal, I want to take steps to solve a long-standing problem.

I have lots of stuff. Books, files, hyperlinks, videos…STUFF. Useful stuff, but unorganised and unstructured stuff. I also have lots of ideas. Ideas for efficiency and growth. Ideas for reliability and resilience. And I have various ways of capturing these ideas depending on where I happen to be. Even my car has a notepad.

Finally, and perhaps most importantly, I have several partially-enacted systems for handling all of this. Some systems turned out to be unfit for purpose. Some were overwhelmed, while others became unwieldy.

Recently, I’ve made efforts to organise and define everything. I’m already finding success with this, and with the recent discovery of the Second Brain and CODE methodologies I now have a framework to utilise. A well-built second brain will help organise my backlog, assist my day-to-day and support my future goals.

Summary

In this post, I used the free YearCompass booklet to reflect on 2023 and to plan some professional goals for 2024.

Having finished this post, I’m happy with my 2024 goals and am looking forward to seeing where the year takes me! I’ll post updates here and via my social, project and session links.

Thanks for reading ~~^~~